Linear regression is a statistical technique for fitting the best straight line through 2D (x, y) data. That is, it finds a line that predicts the y value (the dependent variable) from the x value (the independent variable). More formally, given data points $(x_i, y_i)$, one is trying to find m and c for a line of the form $y = mx + c$. The best values of m and c can be calculated using:

$$m = \frac{\sigma_{xy}}{\sigma_x^2}$$

$$c = \mu_y - m\,\mu_x$$

where $\mu_x$ and $\mu_y$ are the arithmetic means of x and y respectively, $\sigma_x^2$ is the variance of x, and $\sigma_{xy}$ is the covariance of x and y.
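As a minimal sketch of the formulas above (plain Python, no libraries; the function name `fit_line` is hypothetical), the slope is the covariance divided by the variance of x, and the intercept makes the line pass through the point of means. Population (divide-by-n) variance and covariance are assumed here; the 1/n factors cancel in m, so the sample (n − 1) versions would give the same line.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = m*x + c using m = cov(x, y) / var(x), c = mean_y - m * mean_x."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mu_x = sum(xs) / n
    mu_y = sum(ys) / n
    # Population variance and covariance (divide by n); the factor cancels in the slope.
    var_x = sum((x - mu_x) ** 2 for x in xs) / n
    cov_xy = sum((x - mu_x) * (y - mu_y) for x, y in zip(xs, ys)) / n
    if var_x == 0:
        raise ValueError("all x values are equal, so the slope is undefined")
    m = cov_xy / var_x
    c = mu_y - m * mu_x
    return m, c


# Points lying exactly on y = 2x + 1 recover m = 2 and c = 1.
m, c = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
print(m, c)  # prints "2.0 1.0"
```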

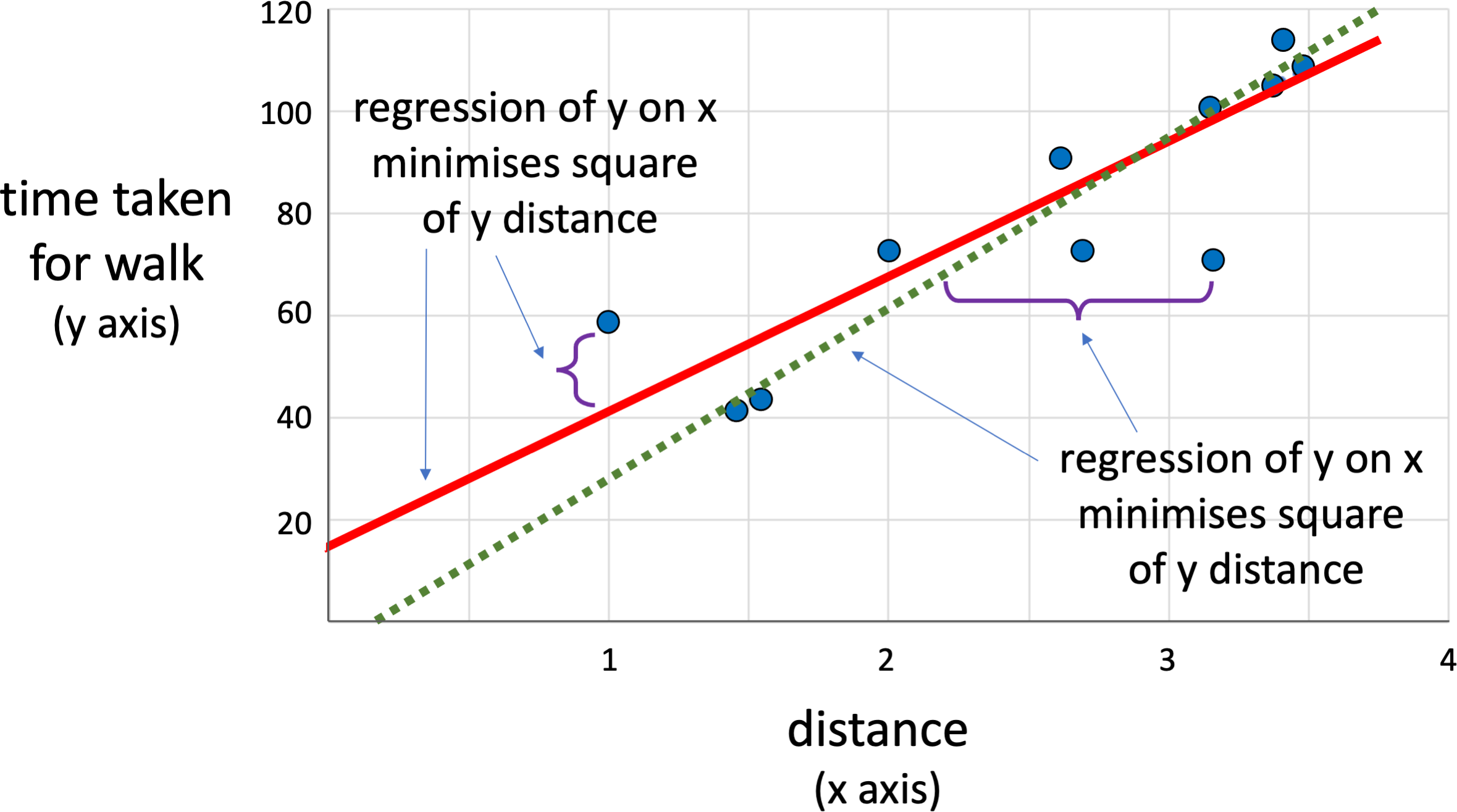

Note that 'best' here means least squares, that is, minimising the sum of the squared errors in y (the vertical distances from the points to the line):

$$\sum_i \left(y_i - (m x_i + c)\right)^2$$
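The sketch below (plain Python; the helper name `sse` and the sample data are made up for illustration) computes this sum of squared y errors and checks numerically that nudging either the slope or the intercept away from the values given by the mean/variance/covariance formulas only makes the error larger, which is what "least squares" promises.

```python
def sse(xs, ys, m, c):
    """Sum of squared vertical (y) errors for the line y = m*x + c."""
    return sum((y - (m * x + c)) ** 2 for x, y in zip(xs, ys))


# Illustrative data only.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

# Least-squares slope and intercept from the covariance/variance formulas.
n = len(xs)
mu_x, mu_y = sum(xs) / n, sum(ys) / n
m = sum((x - mu_x) * (y - mu_y) for x, y in zip(xs, ys)) / sum((x - mu_x) ** 2 for x in xs)
c = mu_y - m * mu_x

best = sse(xs, ys, m, c)
# Moving either parameter away from the least-squares values increases the error.
for dm, dc in [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)]:
    assert sse(xs, ys, m + dm, c + dc) > best
print(round(m, 3), round(c, 3), round(best, 4))
```

Because the sum is a quadratic function of m and c, the least-squares point is its unique minimum whenever the x values are not all equal.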

You get a different answer if you swop the variables and look for the regression of x on y, because that minimises the squared errors in x (the horizontal distances) instead.
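A small illustration, assuming made-up data and a hypothetical helper `slope`: regressing y on x and regressing x on y give two different lines. Both pass through the point of means, but when the x-on-y line is re-expressed as a slope dy/dx (the reciprocal of its own slope) it is steeper. The two lines coincide only when all the points lie exactly on a straight line (correlation ±1); in general the product of the two fitted slopes equals the squared correlation coefficient.

```python
def slope(us, vs):
    """Least-squares slope for predicting vs from us: cov(u, v) / var(u)."""
    n = len(us)
    mu_u, mu_v = sum(us) / n, sum(vs) / n
    cov = sum((u - mu_u) * (v - mu_v) for u, v in zip(us, vs))
    var = sum((u - mu_u) ** 2 for u in us)
    return cov / var


# Illustrative data only.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 1.0, 4.0, 3.0, 5.0]

m_y_on_x = slope(xs, ys)  # line y = m x + c
m_x_on_y = slope(ys, xs)  # line x = m' y + c'
# Written as dy/dx, the x-on-y line has slope 1 / m', which differs from m here.
print(m_y_on_x, 1 / m_x_on_y)
```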

When there is more than one independent variable, there is an extension, multi-linear regression (also called multiple linear regression), which instead fits a hyperplane to the data.
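One way to sketch the hyperplane fit, assuming the usual least-squares criterion carries over unchanged: put a column of ones (for the intercept) alongside the independent variables in a design matrix X and solve the normal equations $(X^T X)\,b = X^T y$. The plain-Python version below (the function name `fit_hyperplane` is hypothetical) does this with Gaussian elimination. It is meant to show the idea; in practice a QR-based solver such as NumPy's `numpy.linalg.lstsq` is numerically safer than forming $X^T X$ explicitly.

```python
def fit_hyperplane(rows, ys):
    """Least-squares fit of y = b0 + b1*x1 + ... + bk*xk via the normal equations (X^T X) b = X^T y."""
    # Design matrix with a leading column of ones for the intercept b0.
    X = [[1.0] + [float(v) for v in r] for r in rows]
    p = len(X[0])
    # Augmented system [X^T X | X^T y].
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)]
         + [sum(row[i] * y for row, y in zip(X, ys))]
         for i in range(p)]
    # Gaussian elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular: collinear predictors or too few rows")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, p):
            f = A[r][col] / A[col][col]
            for k in range(col, p + 1):
                A[r][k] -= f * A[col][k]
    # Back substitution for the coefficients b0..bk.
    b = [0.0] * p
    for i in reversed(range(p)):
        b[i] = (A[i][p] - sum(A[i][k] * b[k] for k in range(i + 1, p))) / A[i][i]
    return b


# Points lying exactly on the plane y = 3 + 2*x1 - x2.
rows = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1)]
ys = [3 + 2 * x1 - x2 for x1, x2 in rows]
print([round(v, 6) for v in fit_hyperplane(rows, ys)])
```

With a single independent variable this reduces to the straight-line case: the coefficients come out as c and m.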

Used in Chap. 7: pages 88, 89, 94, 96, 98, 100; Chap. 8: page 105; Chap. 10: page 138; Chap. 14: page 215

Linear regression for short walks: solid line ignoring the outlier, dotted line including all data.

Linear regression as least squares – different ways to do it